In a recent podcast episode, industry experts discussed the future of AI models and their development. The conversation offered valuable insights into how the AI landscape is evolving and what to expect in the near future. Below are six key takeaways, plus three ways to explore how AI on IBM Power can help transform your organization:

1. Pre-trained models will be abundant.

The speakers agreed that in the near future, there will be many pre-trained models available for various problems.

This means that companies won’t need to create new models from scratch but can instead focus on customizing and fine-tuning existing models. This trend is already underway, with numerous open-source models being developed and shared by researchers and organizations worldwide.

2. Customization and fine-tuning will be the focus.

With pre-trained models readily available, companies can now concentrate on customizing and fine-tuning these models to suit their specific needs. This process involves adapting the pre-trained models to work with their unique data sets and use cases. By doing so, companies can leverage the power of AI without having to invest significant resources in developing new models from scratch.
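As a rough illustration of what adapting a pre-trained model means, here is a minimal sketch in plain Python. It is not taken from the podcast: it treats a one-feature linear model as the "pre-trained" model and nudges its weights toward a small company-specific data set with a few gradient-descent steps. The weights, the data, and the `fine_tune` helper are all hypothetical, and real fine-tuning uses a deep learning framework and far larger models.

```python
# Toy illustration of fine-tuning: keep the existing weights and adjust them
# on new data. All numbers and names here are hypothetical.

def predict(w, b, x):
    return w * x + b

def mse(w, b, data):
    """Mean squared error of the model on a list of (x, y) pairs."""
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)

def fine_tune(w, b, data, lr=0.05, steps=20):
    """Run a few gradient-descent steps on mean squared error."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in data) / n
        grad_b = sum(2 * (predict(w, b, x) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# "Pre-trained" weights, assumed to have been learned elsewhere (y = 2x).
pretrained_w, pretrained_b = 2.0, 0.0

# Small company-specific data set that follows a slightly different rule.
company_data = [(0.0, 1.0), (1.0, 3.5), (2.0, 6.0), (3.0, 8.5)]

loss_before = mse(pretrained_w, pretrained_b, company_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, company_data)
loss_after = mse(tuned_w, tuned_b, company_data)

print(f"loss before fine-tuning: {loss_before:.3f}")
print(f"loss after fine-tuning:  {loss_after:.3f}")
```

The point of the sketch is only that the starting weights are reused rather than discarded; the error on the new data drops after a handful of inexpensive update steps.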

3. NVIDIA’s role in the customization process

NVIDIA can position itself as a provider of tools and resources for this customization process.

The company has already made significant strides in this area by offering platforms like CUDA, cuDNN, and NVIDIA TensorRT, which enable developers to optimize their AI models for better performance and efficiency. By focusing on providing these tools, NVIDIA can help companies streamline their customization process and make the most of pre-trained models.

CUDA

A parallel computing platform and programming model that lets applications, including machine learning frameworks, run general-purpose computation on NVIDIA GPUs. CUDA is supported across NVIDIA’s GPU lines, including GeForce, Quadro, and Tesla.

cuDNN

A GPU-accelerated library of primitives for deep neural networks, including kernels that use Tensor Cores to improve performance on compute-bound operations. For TensorRT, cuDNN is an optional dependency and is only used to speed up a few layers.

NVIDIA TensorRT

An SDK built on CUDA that optimizes trained deep learning models for faster inference on NVIDIA GPUs. TensorRT is reported to be 4–5 times faster than other methods for many real-time services and embedded applications.

4. AI models as high fashion items

The speakers also discussed the idea of AI models becoming more like high fashion items, with prestige and value in their own right. This concept suggests that AI models will not just be commodities but also have a certain level of cachet. Companies may compete to use the most advanced or prestigious models, much like how they might choose high-end fashion brands for their products.

5. IBM’s Granite models as an example.

IBM’s Granite models were mentioned as an example of this trend. These models are designed to be highly performant and efficient, making them attractive options for companies looking to fine-tune their AI solutions. By being available on NVIDIA platforms, these models can reach a wider audience and further solidify their status as prestigious AI tools.

Granite 3.0

Small Language Models (SLMs) for Enterprise

‘Workhorse’ models

- Granite-3.0-8B-Instruct

- Granite-3.0-2B-Instruct

Inference-efficient Mixture of Experts (MoE)

Extremely efficient inference and low latency

- Granite-3.0-3B-A800M

- Granite-3.0-1B-A400M
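The model names hint at why these models are inference-efficient: the "A" suffix gives the number of parameters active per token, roughly 800M of 3B and 400M of 1B. The sketch below, in plain Python, shows the general top-k routing idea behind a Mixture of Experts layer. The router scores and the `route_top_k` helper are made up for illustration, the parameter counts are the nominal sizes read off the names, and none of this is Granite’s actual implementation.

```python
import math

def softmax(scores):
    """Convert raw router scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    # Renormalize so the weights of the selected experts sum to 1.
    return [(i, probs[i] / norm) for i in top]

# Hypothetical router scores for one token across 8 experts.
scores = [0.1, 2.0, -1.0, 0.5, 1.5, -0.3, 0.0, 0.2]
selected = route_top_k(scores, k=2)
print(selected)  # only 2 of the 8 experts would run for this token

# Share of parameters active per token, using the nominal sizes in the names.
for name, total, active in [
    ("Granite-3.0-3B-A800M", 3_000_000_000, 800_000_000),
    ("Granite-3.0-1B-A400M", 1_000_000_000, 400_000_000),
]:
    print(f"{name}: about {active / total:.0%} of parameters active per token")
```

Because only the selected experts run for each token, compute per token scales with the active parameter count rather than the total, which is where the lower latency comes from.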

Guardrail models

Advance safe and trustworthy AI

- Granite-Guardian-3.0-8B

- Granite-Guardian-3.0-2B

Trusted

Trained on trusted and governed data relevant to enterprise domains.

- Trained on over 12 trillion tokens

- 12 different natural languages

- 116 different programming languages

- Using a novel two-stage training method

- Available under Apache 2.0 on Hugging Face
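Because the models are published on Hugging Face, they can be tried with the `transformers` library. The following is a sketch under stated assumptions rather than an official recipe: it assumes `transformers` and `torch` are installed, that the repo ID `ibm-granite/granite-3.0-2b-instruct` matches the model card, and that the model ships a chat template. The `build_messages` and `generate_reply` helpers are hypothetical names chosen for this example.

```python
# Sketch only: assumes `pip install transformers torch` and network access.
# The repo ID is an assumption; confirm it on the Hugging Face model card.
MODEL_ID = "ibm-granite/granite-3.0-2b-instruct"

def build_messages(question):
    """Wrap a user question in the message format that chat templates expect."""
    return [{"role": "user", "content": question}]

def generate_reply(question, model_id=MODEL_ID, max_new_tokens=100):
    # Imported lazily so the helper above works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    # Format the conversation with the model's own chat template.
    inputs = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    )
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Decode only the newly generated tokens, not the prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)
```

Calling `generate_reply("What is fine-tuning?")` downloads the model weights on first use, so the smaller 2B model is a gentler starting point than the 8B one.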

Granite Guardian 3.0 models

- Comprehensive risk and harm detection

The models offer extensive harm detection capabilities, covering social bias, hate, toxicity, profanity, violence, jailbreaking, and specialized RAG checks like groundedness and answer relevance.

- Industry-Leading Accuracy in Safety and Hallucination Detection

In tests across 19 benchmarks, Granite Guardian 3.0 demonstrated superior accuracy in harm detection, surpassing Meta’s Llama Guard models, and showed performance on par with hallucination detection specialists WeCheck and MiniCheck.

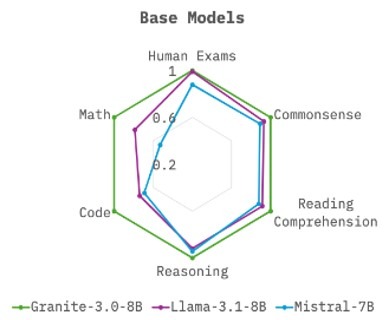

Performant

Top Performance on Academic and Safety Benchmarks

The Granite 3.0 8B Instruct model consistently leads in performance, surpassing similar-sized open-source models from Meta and Mistral on Hugging Face’s OpenLLM Leaderboard and IBM’s AttaQ safety benchmark across all safety dimensions.

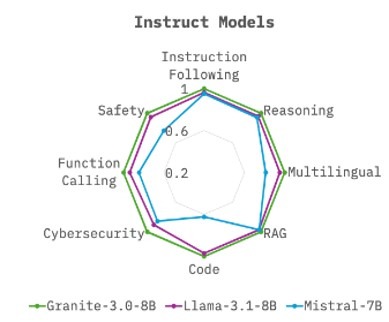

Excellence in Enterprise and Cybersecurity Tasks

The Granite 3.0 8B Instruct model also excels in enterprise tasks like RAG, tool use, and cybersecurity, demonstrating superior accuracy.

6. The future of AI development

As AI continues to evolve, it will be interesting to see how these predictions play out in the real world. The trend towards pre-trained models and customization is already underway, and companies are increasingly leveraging these resources to build their AI solutions. By staying informed about these developments, we can better understand the future of AI and its potential impact on various industries.

Conclusion

The podcast provided valuable insights into the future of AI models and their development. With pre-trained models becoming more abundant, companies will focus on customization and fine-tuning, and NVIDIA can position itself as a provider of tools for this process. Additionally, the idea of AI models becoming high fashion items adds an interesting dimension to the world of AI, with prestige and value associated with these models. As we move forward, it will be exciting to see how these trends shape the AI landscape and influence the development of new technologies.

Discover how AI on IBM Power can help transform your organization.

INFORM YOURSELF

Explore AI on IBM Power yourself with blogs and demos in 30 minutes or less, delivered through the IBM Power Developer eXchange community.

GET BRIEFED

Meet with an IBM AI expert for custom demonstrations of AI on IBM Power capabilities, values, and roadmaps. Understand where AI can impact your organization in 2-4 hours.

START PRODUCTIZING AI

Request a use case alignment workshop to drive toward your first proof-of-concept in 1-4 weeks.

AI on IBM Power: Use Case Alignment Workshop

Connect your line of business and technical teams with the IBM team in an interactive workshop experience to identify and understand your AI on Power use cases and align on the business outcome.

Workshop Outcomes

- Generate prioritized list of use-cases

- Align Business and IT on a single use-case

- Business opportunity statement and potential value

What we need from you:

- Ideas for leveraging AI to support business priorities

- 4 to 8 workshop participants (ideally) spanning Line of Business, IT/Development and Leadership

Potential Activities

- AI landscape

- Generative AI Creativity Matrix

- Business value prioritization

- Business & Technical Feasibility Assessment

- Use-case prioritization matrix

- Business opportunity statement

Credit

IBM Fellow

IBM Research Scientist

Host Tim Hwang is joined by Kate Soule, Kush Varshney and Petros Zerfos

Granite 3.0, NVIDIA’s Nemotron AI model, and Perplexity’s fundraising

Ashwin Srinivas | AI Product Manager

Thanks and credit to tungnguyen0905 for the blog image
