In a groundbreaking presentation at IBM TechXchange 2024, William Starke, IBM Distinguished Engineer and POWER Processor Chief Architect, unveiled how IBM Power is pioneering the next major era in computing: the Memory Era. This shift comes at a crucial time when memory capabilities are becoming increasingly critical for enterprise computing and AI workloads.

The Evolution of Computing Eras

Computing has progressed through distinct eras, each defined by its own challenges and innovations. The Clock Speed Era ran from the 1980s through 2007 and pushed processors to speeds of 5+ GHz. The Multi-core Era that followed brought us processors with 100+ cores. We have now entered what many consider the Memory Era.

Understanding the Computing-Memory Gap Crisis

Over the past two decades, computing power has experienced exponential growth:

- 100x increase in transistors per processor chip

- 112x improvement in rPerf (relative performance) scores

- 140x growth in CPW (Commercial Processing Workload) scores

However, traditional DDR DRAM bandwidth has only managed a 28x increase per unit of processor edge area, achieved through:

- 16x improvement in DDR signalling speed (from 200 MHz to 3200 MHz)

- 1.75x increase in solder ball density (from 29 to 51 signals per mm²)

This growing disparity between computing power and memory capabilities has created a significant bottleneck in system performance.
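The 28x figure is the product of the two contributing factors, and dividing the compute growth figures by it gives a rough sense of the gap. The sketch below uses only the numbers quoted above. The variable names are illustrative, and expressing the gap as a simple ratio is my own assumption, not something taken from the presentation.

```python
# Figures quoted in the text above; all names here are illustrative.
signalling_speedup = 3200 / 200   # DDR signalling speed: 16x
ball_density_gain = 51 / 29       # solder ball density: about 1.76x, quoted as 1.75x

# Using the rounded 1.75x factor reproduces the quoted 28x figure.
ddr_bandwidth_growth = signalling_speedup * 1.75

compute_growth = {"transistors": 100, "rPerf": 112, "CPW": 140}


def gap_ratios(compute, bandwidth_growth):
    """Return how many times faster each compute metric grew than bandwidth."""
    return {name: growth / bandwidth_growth for name, growth in compute.items()}


gaps = gap_ratios(compute_growth, ddr_bandwidth_growth)
for name, ratio in gaps.items():
    print(f"{name}: grew {ratio:.1f}x faster than DDR bandwidth per unit of edge area")
```

On these numbers, rPerf and CPW outgrew direct-attach DDR bandwidth by factors of 4 and 5 respectively.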

Current Industry Approaches and Their Limitations

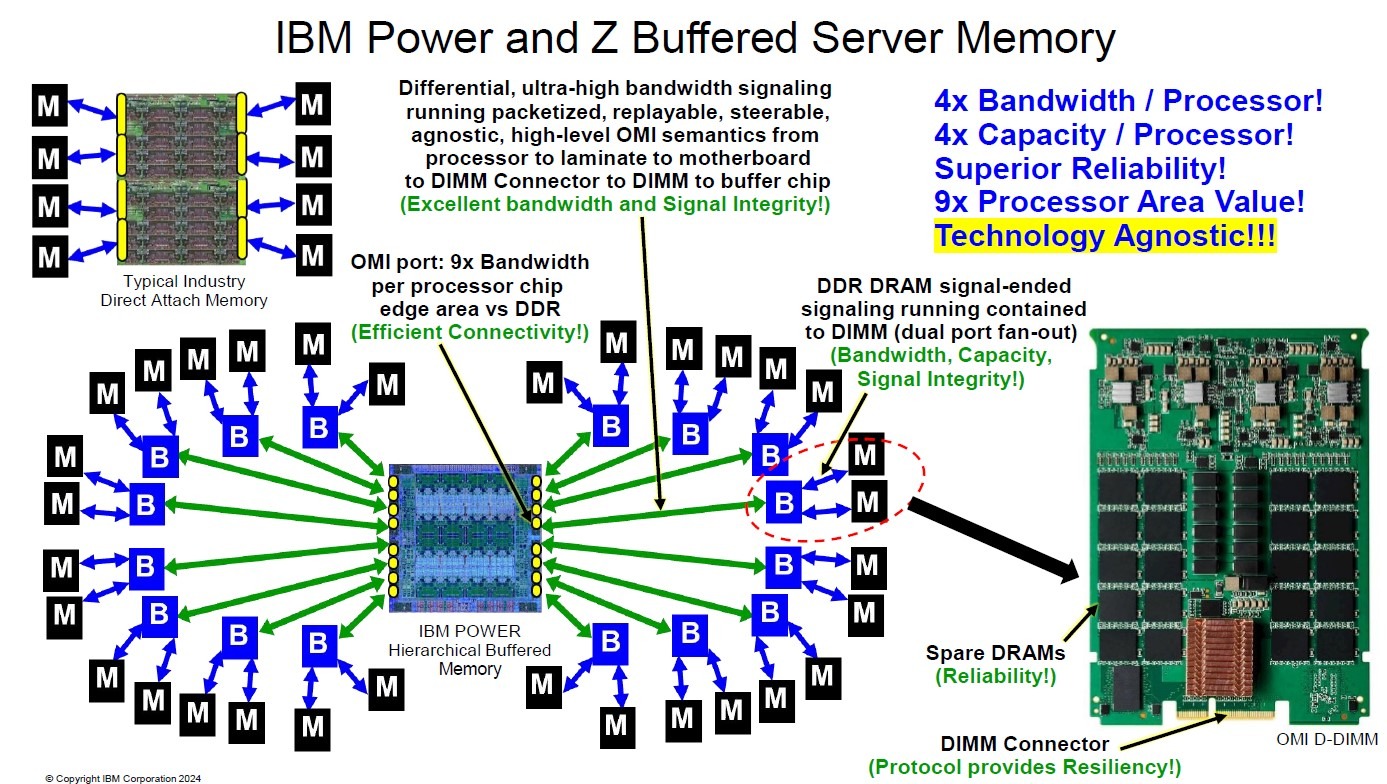

Direct Attach Memory

The typical industry approach uses direct-attach DDR memory, which faces several challenges:

- Limited bandwidth due to signal integrity issues

- Poor reliability with single-ended signaling

- Inefficient use of processor chip edge area

- Memory locked to specific DDR vintages

CXL Far Tier Memory

Although originally intended for new storage-class memory technologies, CXL (Compute Express Link) has been repurposed to address DDR4 obsolescence. It comes with several drawbacks:

- Higher latency compared to main memory

- Limited bandwidth

- Complexity in managing hybrid memory systems

- Primarily serving as a solution for recycling older DDR4 memory

HBM (High Bandwidth Memory)

Used primarily with accelerators like GPUs:

- Extremely high bandwidth but very limited capacity

- Reliability concerns

- Rigid attachment limiting flexibility

- Expensive and complex packaging requirements

IBM Power’s Revolutionary Solution

IBM’s approach revolutionizes server memory architecture through Hierarchical Buffered Memory with OMI (OpenCAPI Memory Interface).

Technical Innovations

- Advanced Signaling Technology

  - Differential, ultra-high bandwidth signalling

  - Packetized, replayable, and steerable communications

  - High-level OMI semantics ensuring signal integrity

- Buffer Architecture

  - DDR controller integrated into a buffer

  - Dual-port fan-out for improved bandwidth

  - Contained DDR signalling within DIMM

  - Spare DRAMs for enhanced reliability

- Historical Evolution

  - Late 1990s-2010s: Good latency with puppet-string buffers

  - 2014: Centaur Agnostic Buffer introducing better latency

  - 2021: OMI Buffer achieving best latency (less than 10ns adder beyond DDR direct)

Quantifiable Advantages

- 4x higher bandwidth compared to traditional solutions

- 4x greater memory capacity per processor

- 9x more efficient use of processor chip edge area

- 156x growth in memory bandwidth since POWER4 with OMI DDR5
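One way to read the 156x figure is to set it beside the compute growth figures quoted earlier (100x transistors, 112x rPerf, 140x CPW) and the 28x figure for direct-attach DDR. The sketch below does that comparison. It assumes all of these figures share roughly the same POWER4-era baseline and are measured on a comparable basis, which the source does not state explicitly, so treat the result as indicative only. All names are illustrative.

```python
# Growth factors quoted in the article; the shared baseline is an assumption.
omi_ddr5_bandwidth_growth = 156
direct_ddr_bandwidth_growth = 28
compute_growth = {"transistors": 100, "rPerf": 112, "CPW": 140}


def keeps_pace(bandwidth_growth, compute):
    """For each compute metric, report whether bandwidth growth matched or beat it."""
    return {name: bandwidth_growth >= growth for name, growth in compute.items()}


omi_result = keeps_pace(omi_ddr5_bandwidth_growth, compute_growth)
direct_result = keeps_pace(direct_ddr_bandwidth_growth, compute_growth)
advantage = omi_ddr5_bandwidth_growth / direct_ddr_bandwidth_growth

print("OMI DDR5 keeps pace:", omi_result)
print("Direct-attach DDR keeps pace:", direct_result)
print(f"OMI DDR5 growth is {advantage:.1f}x the direct-attach growth")
```

Under that assumption, OMI DDR5 bandwidth growth matches or exceeds every quoted compute metric, while direct-attach DDR trails all of them.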

Key Differentiators

- Technology Agnostic Design

  - Memory and processors can be upgraded independently

  - Future-proof architecture accommodating new memory technologies

  - No lock-in to specific DDR generations

- Superior Reliability

  - ECC protection

  - Differential signalling

  - Spare DRAM support

  - Contained DDR signals within DIMM

- Scalability and Composability

  - Flexible architecture supporting various configurations

  - Efficient scaling for large memory deployments

  - Better resource utilization through memory disaggregation
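The reliability benefit of differential signalling listed above comes from a general electrical principle: noise that couples equally onto both wires of a pair cancels when the receiver takes the difference. The toy model below illustrates this. It is an idealized sketch that assumes perfectly common-mode noise and made-up voltage levels; it is not a model of OMI or of any real DDR interface, and all function names are hypothetical.

```python
def receive_single_ended(levels, threshold=0.5):
    """Decode each level by comparing one wire against a fixed threshold."""
    return [1 if v > threshold else 0 for v in levels]


def receive_differential(pos_levels, neg_levels):
    """Decode each bit from the sign of the difference between the two wires."""
    return [1 if p - n > 0 else 0 for p, n in zip(pos_levels, neg_levels)]


bits = [0, 1, 0, 1]
noise = [0.7, -0.7, 0.6, -0.6]  # illustrative noise, identical on every wire

# Single-ended: one wire carries the bit level plus noise.
single_wire = [b + n for b, n in zip(bits, noise)]

# Differential: two wires carry complementary levels plus the same noise.
pos_wire = [b + n for b, n in zip(bits, noise)]
neg_wire = [(1 - b) + n for b, n in zip(bits, noise)]

single_result = receive_single_ended(single_wire)
diff_result = receive_differential(pos_wire, neg_wire)

print("sent:        ", bits)
print("single-ended:", single_result)
print("differential:", diff_result)
```

In this contrived case the single-ended receiver misreads every bit, while the differential receiver recovers all of them because the shared noise term drops out of the subtraction.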

Impact on Enterprise Computing and AI

Enterprise Applications

The architecture is particularly beneficial for memory-intensive applications like SAP HANA, where:

- High memory bandwidth is crucial for real-time analytics

- Large memory capacity enables bigger in-memory databases

- Reliability is essential for business-critical operations

- Composable scale allows for flexible resource allocation

AI Workloads

As AI models continue to grow, IBM’s solution offers unique advantages:

- Combined high bandwidth and capacity supporting larger models

- Efficient memory hierarchy for AI training and inference

- Flexible architecture accommodating evolving AI requirements

- Cost-effective scaling compared to HBM-only solutions

Future Outlook

IBM Power’s memory architecture positions it as a leader in the Memory Era, particularly for:

- Enterprise Computing

  - Growing demand for in-memory databases

  - Real-time analytics requirements

  - Mission-critical reliability needs

- AI and Machine Learning

  - Expanding model sizes

  - Increasing memory bandwidth requirements

  - Need for flexible scaling

- Hybrid Workloads

  - Combined traditional and AI workloads

  - Dynamic resource allocation

  - Varied performance requirements

Conclusion

As we progress deeper into the Memory Era, IBM Power’s innovative approach to memory architecture provides a compelling solution to the growing computing-memory gap. While competitors struggle with hybrid solutions combining various technologies, IBM’s unified approach through OMI offers a cleaner, more efficient, and more scalable solution. This technological advantage becomes crucial for next-generation applications requiring high memory bandwidth, capacity, reliability, and composable scale. The success of this architecture validates IBM’s long-term vision and investment in memory technology, positioning IBM Power Systems as the platform of choice for future enterprise computing and AI workloads.

Credit:

Errors are my own; credit goes to Bill and TechXchange 2024.

William Starke, IBM Distinguished Engineer and POWER Processor Chief Architect

IBM Power’s Differentiated Memory Roadmap

IBM TechXchange 2024

This session explores the historical importance of Power’s memory architecture, reviews recent developments in Power’s memory roadmap, and highlights the strengths of Power’s memory architecture going forward versus developments in industry memory architectures.
